Startups

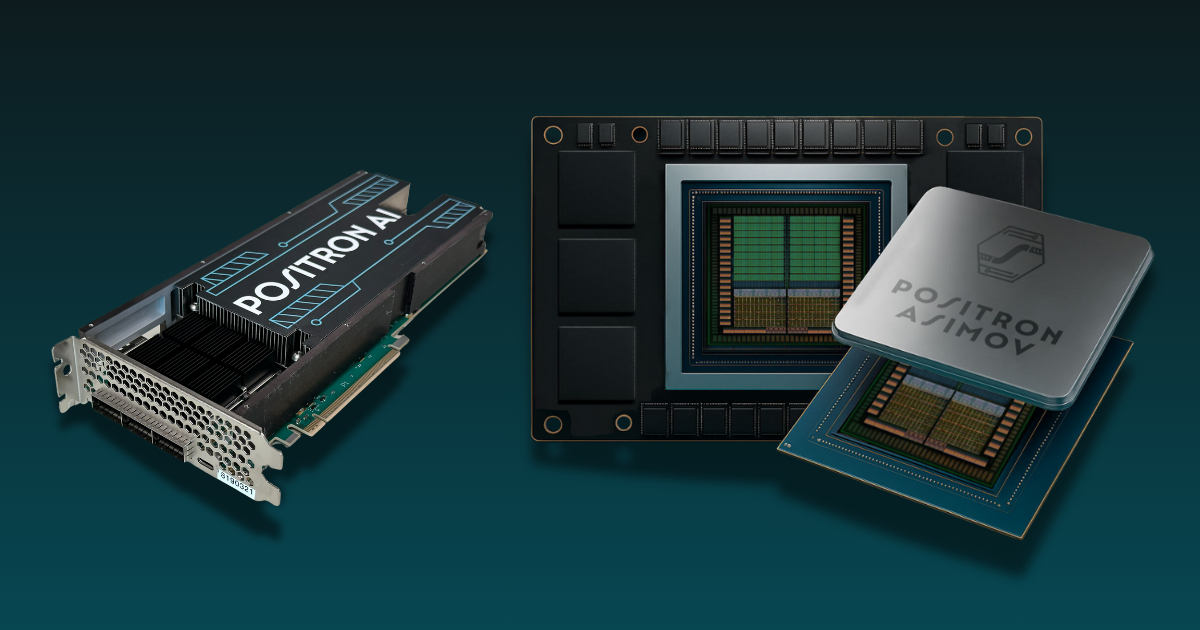

Positron raises $230M Series B to take on Nvidia’s AI chips

The startup is building high-speed memory and inference-focused silicon for the next wave of AI workloads.

The next big constraint in AI infrastructure isn’t only compute. It is also moving data fast enough, cheaply enough, and with predictable latency.

What to know

- Inference optimization is becoming its own battleground as companies try to lower serving costs.

- “Memory bandwidth” and “latency” are as strategic as raw FLOPS for many real-world products.

- Competition with incumbents typically hinges on software ecosystem compatibility and cost curves at scale.
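The roofline model gives a back-of-envelope view of why memory bandwidth can matter as much as peak FLOPS for inference. The sketch below assumes a hypothetical accelerator (1,000 TFLOP/s peak, 3 TB/s memory bandwidth) and a hypothetical 7B-parameter model with 16-bit weights. None of these figures are Positron's or Nvidia's specifications, and the helper names are illustrative only. At batch size 1, each generated token streams every weight from memory once while doing roughly two FLOPs per weight, so the work sits far below the "ridge point" where compute becomes the limit.

```python
def attainable_flops(peak_flops: float, mem_bw_bytes_per_s: float, intensity_flop_per_byte: float) -> float:
    """Roofline model: throughput is capped by peak compute or by memory traffic, whichever is lower."""
    return min(peak_flops, mem_bw_bytes_per_s * intensity_flop_per_byte)


def decode_tokens_per_s_upper_bound(n_params: float, bytes_per_param: float, mem_bw_bytes_per_s: float) -> float:
    """Batch-1 decoding reads every weight once per token, so bandwidth sets a hard ceiling."""
    bytes_per_token = n_params * bytes_per_param
    return mem_bw_bytes_per_s / bytes_per_token


# Hypothetical accelerator (illustrative numbers, not any vendor's spec).
PEAK_FLOPS = 1000e12   # 1,000 TFLOP/s dense compute
MEM_BW = 3e12          # 3 TB/s memory bandwidth

# Hypothetical 7B-parameter model stored as 16-bit weights (2 bytes each).
N_PARAMS = 7e9
BYTES_PER_PARAM = 2

# About 2 FLOPs (multiply + add) per weight per token, 2 bytes read per weight -> ~1 FLOP/byte at batch 1.
intensity = 2 / BYTES_PER_PARAM

ridge = PEAK_FLOPS / MEM_BW  # arithmetic intensity needed before compute, not memory, is the limit
achieved = attainable_flops(PEAK_FLOPS, MEM_BW, intensity)
tokens = decode_tokens_per_s_upper_bound(N_PARAMS, BYTES_PER_PARAM, MEM_BW)

print(f"ridge point: {ridge:.0f} FLOP/byte")
print(f"attainable at batch 1: {achieved / 1e12:.1f} TFLOP/s ({achieved / PEAK_FLOPS:.1%} of peak)")
print(f"decode ceiling: {tokens:.0f} tokens/s")
```

With these assumed numbers the chip would need about 333 FLOPs per byte to be compute-bound, yet batch-1 decoding delivers roughly 1, leaving about 0.3% of peak compute usable and capping output near 214 tokens/s. Batching, quantization, and faster memory all raise that ceiling, which is why inference-focused silicon tends to compete on bandwidth and latency rather than headline FLOPS alone.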

Related

More Startups

Adaption Labs raises $50M seed to build smaller AI models that learn on the fly

Ex-Cohere researcher Sara Hooker’s new startup is pitching adaptive models designed to improve with use.

ElevenLabs raises $500M from Sequoia at an $11B valuation

The voice AI startup’s new round fuels expansion and keeps IPO chatter alive.

EnFi raises $15M to deploy AI credit-analyst agents at banks

The startup is pitching agent-like workflows that automate parts of credit analysis and monitoring.

SynthBee emerges from stealth with $100M for regulated-industry collaboration

The startup is pitching “collaborative intelligence” for teams working under strict compliance rules.

Pasito raises $21M Series A for an AI-native employee benefits platform

The company is betting benefits administration can be rebuilt around automation and personalized guidance.

Startup funding snapshot: early February 2026

A quick roundup of notable February funding announcements across AI, fintech, and automation.